Cloud File Server: Smart Cache

What Is a Smart Cache?

Smart Cache is a caching method for processors with multiple execution cores. Intel designed it for multi-core processors and introduced it in 2006. AMD followed in 2007 with its own version, called Shared Cache. Smart Cache allows each core to dynamically use up to 100% of the available level two (L2) cache. Cores can also fetch data from the cache simultaneously, which increases throughput. By improving the execution efficiency of the multi-core architecture, Smart Cache increases performance and reduces energy expenditure.

Among the many capabilities of Smart Cache are the following.

- Accelerates digital applications with multi-criteria queries without impacting performance

- Handles planned and unplanned peak loads with high concurrency

- Manages multiple concurrent clients

- Scales either up or out for transactional or analytical workloads based on the CPU and RAM utilization

- Shortens the batch reporting windows

- Supports an unlimited number of advanced indexes, including nested objects, collections, compound indexes, geospatial, and full-text search

What Is the Difference Between Cache and Smart Cache?

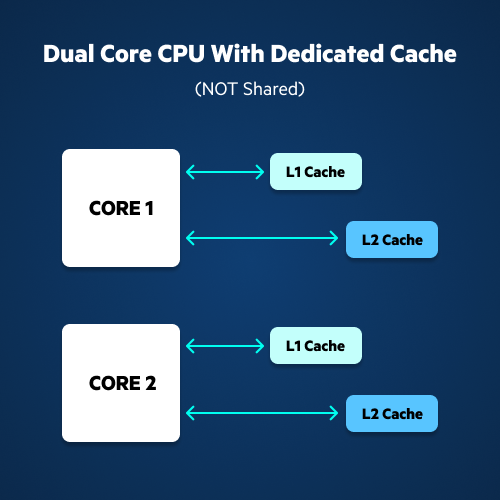

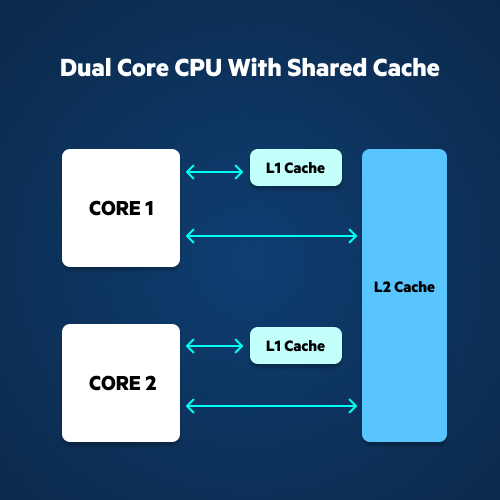

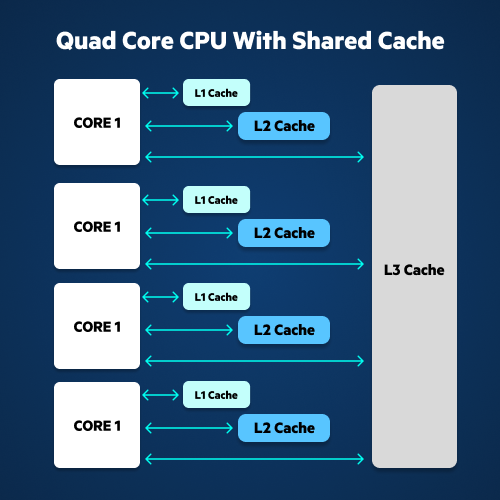

Smart Cache is an optimization of cache. It shares the cache memory among the cores of a multi-core processor, which is a single integrated circuit (also called a chip multiprocessor, or CMP) that contains two or more processor cores. Because the cache is shared across cores rather than split into fixed per-core portions, Smart Cache decreases the miss rate compared to a per-core cache model.

With Smart Cache, a single core can use all of the available level 2 or level 3 cache if the other cores are inactive. The shared cache also makes it faster to share data among different execution cores.
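The effect on miss rate can be illustrated with a small simulation. This is only a sketch under stated assumptions: the `LRUCache` class, the least-recently-used eviction policy, the 8-block cache size, and the looping workload are all hypothetical and are not taken from any real processor. It compares one busy core that is limited to a fixed half of the cache with the same core using the whole shared cache while the other core is idle.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity cache with least-recently-used eviction (illustrative only)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.lines = OrderedDict()  # oldest entry first
        self.hits = 0
        self.misses = 0

    def access(self, address):
        """Record one access and return True on a hit, False on a miss."""
        if address in self.lines:
            self.lines.move_to_end(address)  # mark as most recently used
            self.hits += 1
            return True
        self.misses += 1
        self.lines[address] = True
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)  # evict the least recently used block
        return False


def count_misses(cache, trace):
    """Replay a list of block addresses and return the number of misses."""
    for address in trace:
        cache.access(address)
    return cache.misses


# Hypothetical workload: core 0 loops over 6 blocks five times; core 1 is idle.
trace = list(range(6)) * 5

private_slice = LRUCache(capacity=4)  # core 0's fixed half of an 8-block cache
shared_cache = LRUCache(capacity=8)   # the whole cache, usable by core 0 alone

private_misses = count_misses(private_slice, trace)
shared_misses = count_misses(shared_cache, trace)
print(private_misses, shared_misses)
```

In this contrived case the working set does not fit in the fixed half, so every access misses, while the shared cache only misses the first time each block is touched. Real workloads and replacement policies behave less dramatically.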

What Are the Three Types of Cache Memory?

Cache memory is a set of fast-access memory locations that store data and instructions and serve them to the processor. How cache memory handles data and instructions can increase the performance of a processor. Cache memory is categorized into three levels that describe its proximity and accessibility to the microprocessor.

1. Level one cache (L1 cache or primary cache)

2. Level two cache (L2 cache or secondary cache)

3. Level three cache (L3 cache)

L1 cache or primary cache

L1 cache is extremely fast, but is not designed to hold a lot of data. In most CPUs, the L1 cache is divided into two parts for the types of information they hold: L1d, a data section, and L1i, an instruction section. L1 cache is the most expensive type of memory per byte, which is one reason its size is extremely limited.

Because it is extremely small, ranging from 2KB to 64KB of data per core, L1 cache is usually embedded in the processor chip itself as CPU cache. Because of its location, L1 cache is often referred to as internal cache. In contrast, L2 has often been referred to as an external cache, because in older designs it was mostly located off-chip and had to communicate with the CPU through bus lines.

A commonly cited benefit of a primary cache is that it works at almost the same speed as the processor.

Located on-chip, L1 cache can deliver an immediate speed advantage, because it can communicate directly to the processor without having to send signals across the bus lines.

Since L1 cache is very fast, it is the first place a processor will look for data or instructions that may have been buffered there from RAM (random access memory). L1 cache works much like RAM. Instructions are stored in the device’s L1 cache to access when needed. When instructions are no longer needed, they are deleted to free up space for a new set of instructions.

L2 cache or secondary cache

L2 cache is larger than L1 cache, usually 256KB to 512KB per core, and can hold more instructions and data. However, L2 cache is not as fast as L1 cache, because it is not as close to the core.

While L2 cache is sometimes embedded in the CPU, it can also reside on a separate chip or co-processor. When on a separate chip, L2 cache needs a high-speed alternative system bus to connect it to the CPU. This avoids the latency that traffic on the main system bus can cause.

L2 cache connects a device’s processor and its main memory. If an L1 cache miss occurs, the device usually jumps down to the secondary cache to find the data or instructions it needs.
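The fall-through from L1 to L2 to main memory can be sketched as follows. This is a simplified model, not how any particular CPU implements it: the `lookup` function, the dictionary-based caches, and the sample addresses are hypothetical, and capacity limits and eviction are left out on purpose.

```python
def lookup(address, levels, main_memory):
    """Search the caches from fastest to slowest and report where the value was found.

    levels is a list of (name, cache_dict) pairs ordered from L1 outward.
    Any faster level that missed is filled with the value on the way back.
    """
    for depth, (name, cache) in enumerate(levels):
        if address in cache:
            value, source = cache[address], name
            break
    else:
        # Missed in every cache level: fall back to main memory.
        depth, value, source = len(levels), main_memory[address], "RAM"

    for _, cache in levels[:depth]:
        cache[address] = value  # fill the levels that missed
    return value, source


# Hypothetical contents: one instruction is already in L2, one is only in RAM.
main_memory = {0x10: "add", 0x20: "load"}
l1, l2 = {}, {0x20: "load"}
levels = [("L1", l1), ("L2", l2)]

first = lookup(0x20, levels, main_memory)   # L1 miss, L2 hit
second = lookup(0x20, levels, main_memory)  # now served from L1
third = lookup(0x10, levels, main_memory)   # misses both levels, read from RAM
print(first, second, third)
```

After the first lookup the value is copied into L1, so the repeated request is served from the fastest level.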

L3 cache

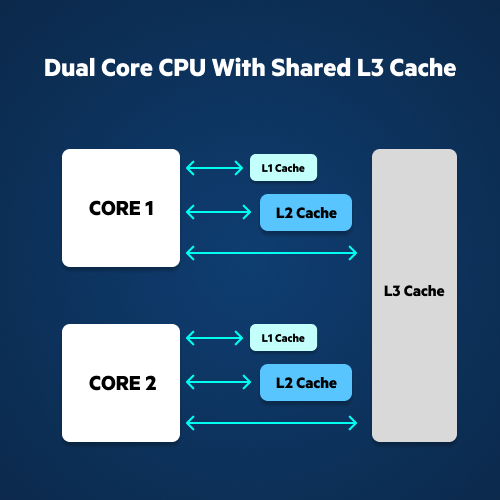

L3 cache is specialized memory designed to optimize the performance of L1 and L2 cache. It is the largest type of cache memory with a capacity that is usually between 1MB and 8MB. This means that L3 cache can hold a lot more data and instructions than L1 or L2 cache. While L3 cache is the slowest of the three types of cache, it is still faster than main memory and usually double the speed of standard DRAM.

In a multi-core configuration, each core can have a dedicated L1 and L2 cache and share L3 cache. Usually, L3 cache serves L1 and L2 cache at the same time. This allows L3 cache to facilitate and expedite data sharing and inter-core communication.
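One common way to reason about how the three levels combine is the average memory access time, where each level adds its own latency plus the cost of going one level further out on a miss. The sketch below assumes hypothetical latencies (in cycles) and miss rates chosen only for illustration; they are not measurements of any real processor.

```python
def average_access_time(levels, memory_latency):
    """Return the average access time for a cache hierarchy.

    levels is a list of (hit_latency, miss_rate) pairs ordered from L1 outward.
    Works from the outermost level inward:
    time = hit_latency + miss_rate * (time of the next level out).
    """
    time = memory_latency
    for hit_latency, miss_rate in reversed(levels):
        time = hit_latency + miss_rate * time
    return time


# Hypothetical figures: (latency in cycles, fraction of accesses that miss).
hierarchy = [
    (4, 0.10),   # L1
    (12, 0.50),  # L2
    (40, 0.50),  # L3
]
with_l3 = average_access_time(hierarchy, memory_latency=200)
without_l3 = average_access_time(hierarchy[:2], memory_latency=200)
print(round(with_l3, 2), round(without_l3, 2))
```

With these made-up numbers, the L3 level lowers the average because half of the L2 misses are caught before they reach main memory.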

Is Cache the Same as SRAM?

Yes, in general, all types of cache are implemented with SRAM. It provides low-latency access to blocks of data that have been accessed recently. When data is requested from a cache, and the cache can fulfill that request, there is a cache hit, and the data is supplied by the SRAM.

Although L1, L2, and L3 cache all use SRAM, they do not use the same SRAM design. SRAM for L2 and L3 cache is optimized for size, with the goal of increasing capacity within manufacturing chip size limitations and reducing the chip cost per unit of capacity. SRAM used for L1 is most commonly optimized for speed.

All computer systems, including on-premises servers, servers located in private data centers, cloud-based servers, and desktop PCs, have some amount of very fast SRAM.

Smart Cache Speeds Cloud File Server Access

A Smart Cache solution helps ensure that users have fast access to content even when they work with large files at locations with low bandwidth. With a Smart Cache solution, frequently accessed files can be cached automatically to on-premises storage so that they can be accessed at LAN speed.

When cached content is no longer in consistent use, it can be released to free up storage space. In addition, specific folders can be synced to ensure access in the event of network downtime. Using a Smart Cache solution makes it easier to manage storage footprints and provides users with fast access to the data they need the most.
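The behavior described above (cache what is accessed, release what is no longer in use, keep synced folders available) can be sketched in a few lines. This is a hypothetical illustration and not Egnyte's implementation: the `FileCache` class, the least-recently-used release rule, the byte budget, and the folder and file names are all assumptions.

```python
from collections import OrderedDict


class FileCache:
    """Toy on-premises file cache with a size budget and pinned (synced) folders."""

    def __init__(self, capacity_bytes, pinned_folders=()):
        self.capacity_bytes = capacity_bytes
        self.pinned_folders = tuple(pinned_folders)
        self.files = OrderedDict()  # path -> size, least recently used first
        self.used_bytes = 0

    def is_pinned(self, path):
        """Files under a pinned folder are never released."""
        return any(path.startswith(folder) for folder in self.pinned_folders)

    def access(self, path, size_bytes):
        """Return True if the file is served locally; otherwise cache it and return False."""
        if path in self.files:
            self.files.move_to_end(path)  # mark as recently used
            return True
        self.files[path] = size_bytes
        self.used_bytes += size_bytes
        self._release_unused()
        return False

    def _release_unused(self):
        """Release least recently used, unpinned files until the budget is met."""
        for path in list(self.files):
            if self.used_bytes <= self.capacity_bytes:
                break
            if self.is_pinned(path):
                continue
            self.used_bytes -= self.files.pop(path)


# Hypothetical usage with made-up paths and sizes.
cache = FileCache(capacity_bytes=100, pinned_folders=("/projects/site-a/",))
cache.access("/projects/site-a/plan.dwg", 40)   # miss, cached and pinned
cache.access("/shared/report.pdf", 40)          # miss, cached
served_locally = cache.access("/shared/report.pdf", 40)  # hit
cache.access("/shared/video.mp4", 40)           # miss, forces a release
print(served_locally, list(cache.files), cache.used_bytes)
```

In this run the pinned drawing stays cached even though it is the least recently used file, and the unpinned report is released to make room for the new video.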

Egnyte has experts ready to answer your questions. For more than a decade, Egnyte has helped more than 16,000 customers with millions of users worldwide.

Last Updated: 12th April, 2023