AI Designed to Extract Insights From Your Organization's Content

insights, create summaries, and perform actions via a simple, intuitive interface built into users’ workflows.

Reduce Security Risks

Improve Insight Accuracy

Elevate Workflows

Increase the speed at which work can be completed with AI built into existing workflows.

Powerful Intelligence Features

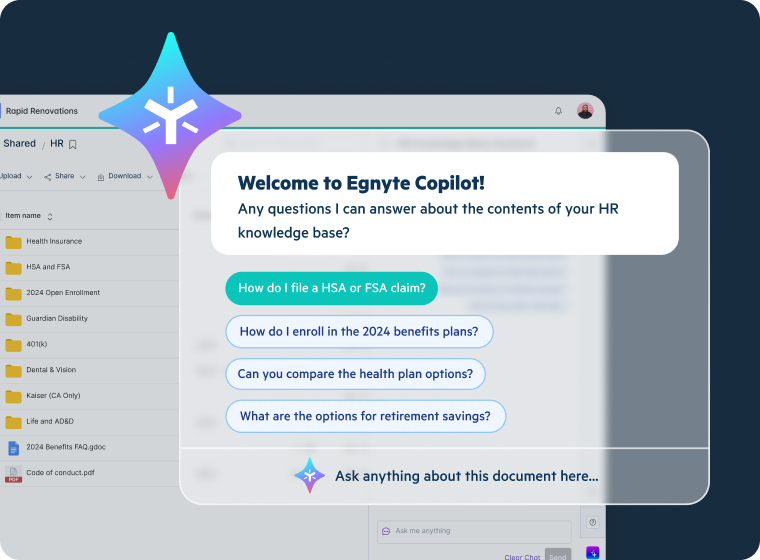

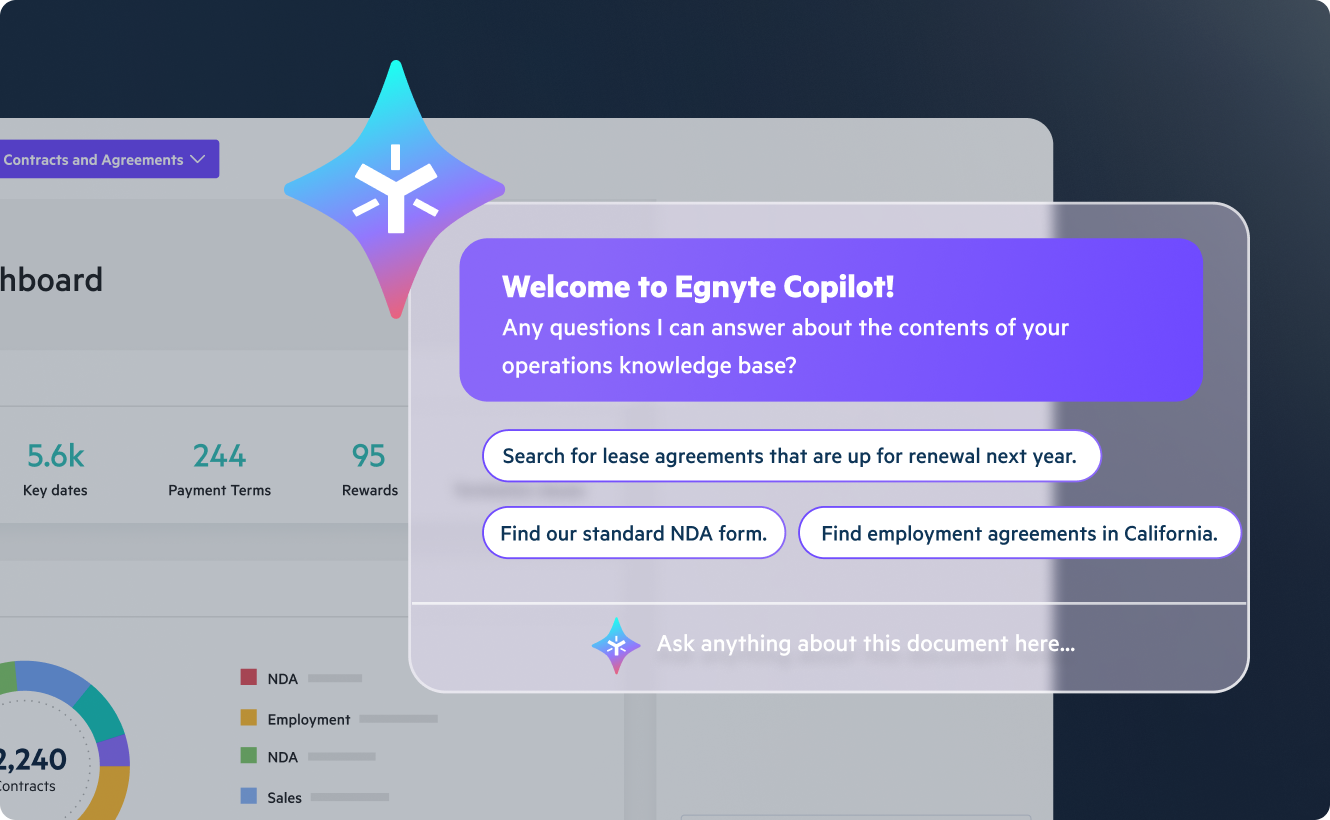

Conversational AI With Copilot

- Save time and reduce workload with instant document summaries

- Drive informed decisions with fast insights generated from your content

- Craft better prompts with the one-click Prompt Wizard

- Boost team efficiency with shared prompt libraries and custom prompts you can save

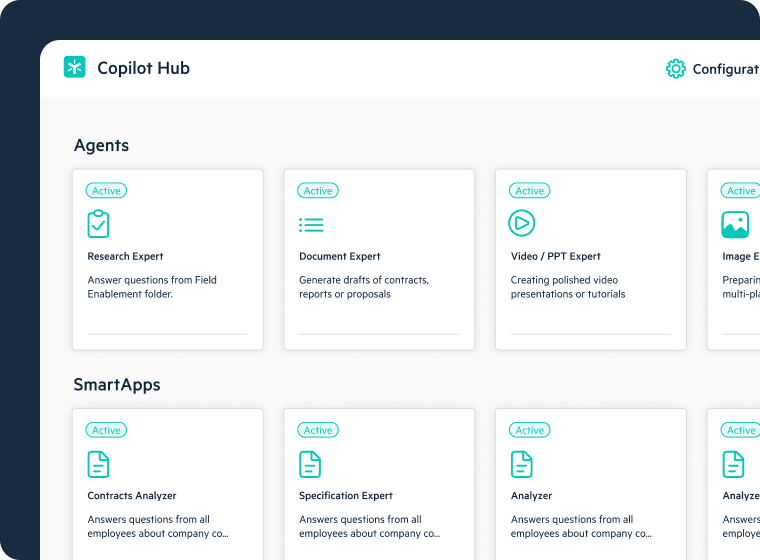

AI Agents

- Easily customize AI agents to your workflows

- Automate workflows for role-specific tasks with AI agents

- Enhance quality, speed, and strategic impact with project-specific agents: Specifications Analyst and Building Code Analyst

- Extract contract clauses instantly and simplify legal language with Contract Analyst

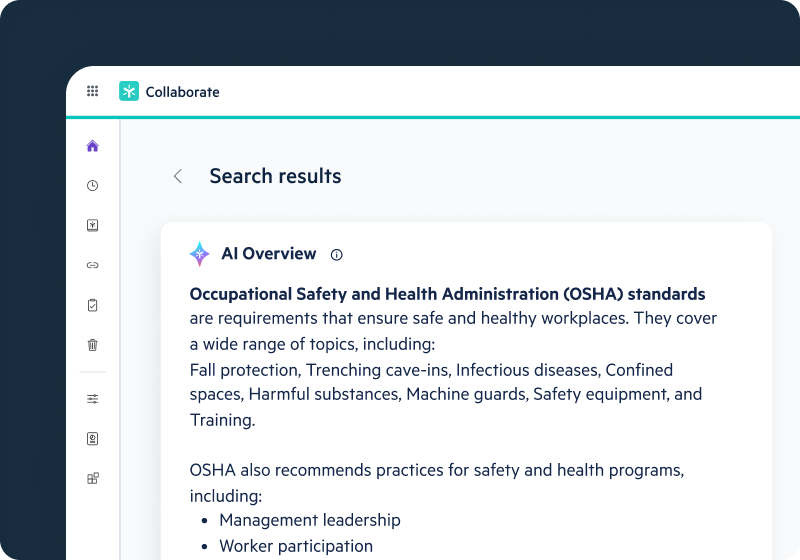

AI Search

- Generate AI-driven summaries from top-ranked results

- Discover content with unified search across recent files, bookmarks, and folders

- Upload an image to find visually similar images easily

- Find photos by geolocation tag within a designated area, or by other image metadata

- Transcribe AV files for easy search and enhanced content analysis

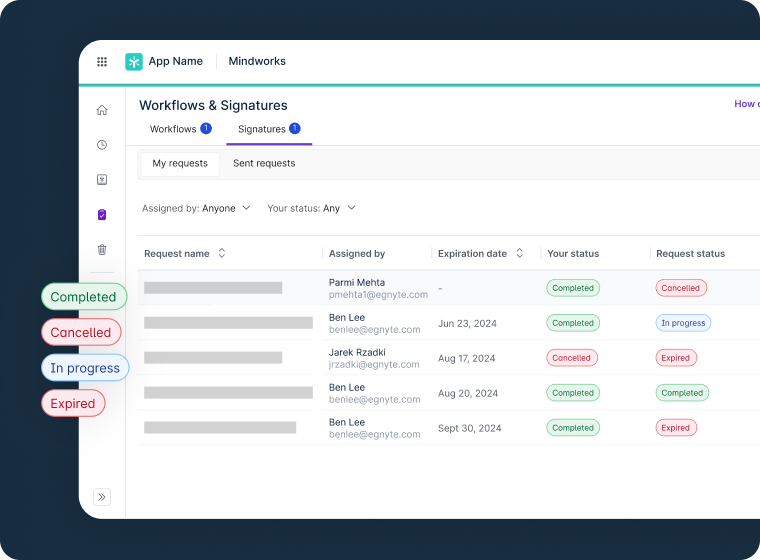

AI Workflow

- Automate work processes by combining AI with workflows

- Initiate a new workflow based on AI-applied metadata

- Trigger an AI agent action as part of a workflow step

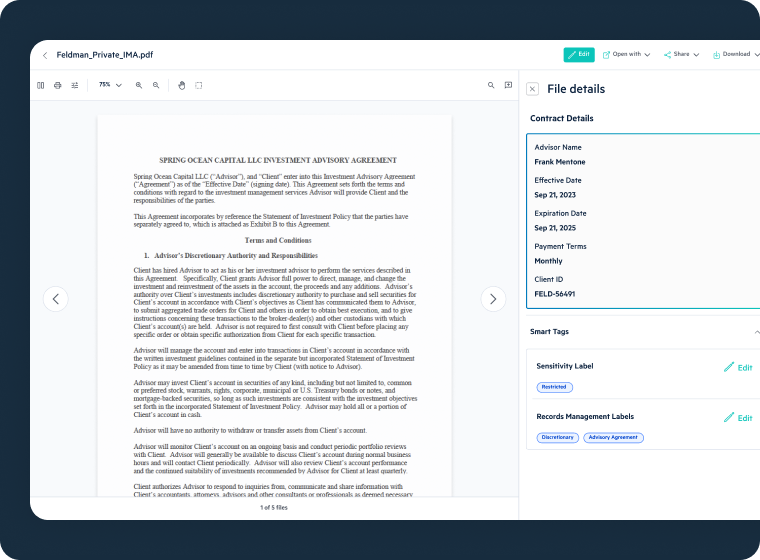

Extractors

- Use industry-specific AI/ML to extract metadata from images and documents

- Export extracted metadata in JSON or CSV and integrate it with other systems via API

- Enhance organization and workflows with customized reports to boost productivity

Smart Tags

- Scan and label content for better organization and protection

- Auto-tag documents and images for improved discovery and search

- Generate labels like Confidential or Legal to improve organization and detection by data loss prevention (DLP) and cloud access security broker (CASB) systems

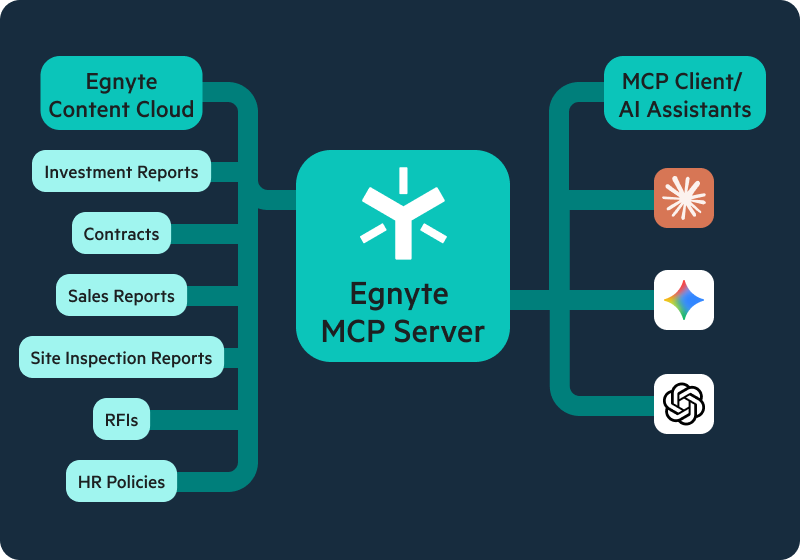

Egnyte MCP Server

- Unlock AI insights while keeping sensitive content secure and compliant

- Connect assistants like ChatGPT, Claude, and Copilot to your enterprise data

- Deploy on your terms with open-source or fully managed options

- Ensure AI access respects your existing permissions, so AI tools only see authorized content


See Egnyte Copilot In Action

Boost productivity and improve decision-making with generative AI features that:

- Instantly summarize files, ask questions in natural language, and generate new content

- Share prompt libraries across your teams and create custom prompts to enhance productivity

- Discover and extract insights from multi-modal content like images, designs, and audio/video


FAQs

Content intelligence uses AI to analyze and organize files based on their content, context, and usage. It supports data governance by providing visibility into sensitive information, reducing data sprawl, and helping enforce compliance policies across cloud and on-premises environments.

Businesses can reduce unstructured data risk by scanning files for sensitive information, such as PII or health data, and applying automated policies for access, classification, or retention. Content intelligence tools help uncover hidden risks and minimize exposure from unmanaged content.

AI-powered classification helps automatically categorize data based on sensitivity, usage, or compliance needs. This reduces the manual burden on IT teams, improves accuracy, and enables faster, more informed decisions around file access, storage, and policy enforcement.

Organizations can detect sensitive data by using tools that scan file repositories for specific data patterns (e.g., Social Security numbers, credit card numbers, or health records). These tools provide dashboards and alerts, helping teams manage access, track movement, and ensure compliance.
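As a rough illustration of how pattern-based scanning works in general (this is not Egnyte's implementation), the Python sketch below matches U.S. Social Security number and payment card formats with regular expressions, then applies the Luhn checksum to discard digit runs that cannot be valid card numbers. The names `PATTERNS`, `luhn_valid`, and `scan_text` and both regexes are hypothetical and deliberately simplified; real detectors add context, validation rules, and many more data types.

```python
import re

# Hypothetical, simplified detectors; production tools use far richer rules.
PATTERNS = {
    # U.S. SSN in the common NNN-NN-NNNN format.
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "credit_card": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def luhn_valid(number: str) -> bool:
    """Return True if the digits in `number` pass the Luhn checksum."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    total = 0
    # Walk from the rightmost digit, doubling every second one.
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def scan_text(text: str) -> list[tuple[str, str]]:
    """Return (label, value) pairs for suspected sensitive values in `text`."""
    findings = []
    for label, pattern in PATTERNS.items():
        for match in pattern.finditer(text):
            value = match.group()
            # Skip digit runs that look like card numbers but fail the checksum.
            if label == "credit_card" and not luhn_valid(value):
                continue
            findings.append((label, value))
    return findings
```

For example, `scan_text("SSN 123-45-6789, card 4111 1111 1111 1111, order 1234567890123")` reports the SSN and the card number but drops the order number, because it fails the Luhn check. The checksum step is what keeps arbitrary long numbers from flooding the alerts.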

Automation helps manage the content lifecycle by flagging outdated or redundant files, applying retention policies, and enabling timely archiving or deletion. This reduces clutter, optimizes storage costs, and limits the surface area for potential data breaches.
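To make "flagging outdated or redundant files" concrete, here is a minimal, hypothetical Python sketch (not an Egnyte API): it treats a file as stale when it has not been modified within an assumed retention window, and as redundant when its SHA-256 content hash matches an older file. The `FileRecord` type, the `flag_for_review` function, and the three-year default are all illustrative assumptions.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class FileRecord:
    """Hypothetical in-memory stand-in for a file in a repository."""
    path: str
    content: bytes
    last_modified: datetime


def flag_for_review(files, now, max_age_days=3 * 365):
    """Return (path, reason) pairs for stale or duplicate files.

    Assumption: the oldest copy of identical content is kept as the
    original, and later copies are reported as duplicates of it.
    """
    stale_cutoff = now - timedelta(days=max_age_days)
    first_seen = {}  # content hash -> path of the first (oldest) copy
    flags = []
    for record in sorted(files, key=lambda r: r.last_modified):
        digest = hashlib.sha256(record.content).hexdigest()
        if digest in first_seen:
            flags.append((record.path, f"duplicate of {first_seen[digest]}"))
        else:
            first_seen[digest] = record.path
        if record.last_modified < stale_cutoff:
            flags.append((record.path, "stale"))
    return flags
```

Flagged files would then feed a review, archiving, or deletion step governed by the organization's retention policy, rather than being removed automatically.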

Content intelligence supports compliance by continuously scanning files for regulatory-sensitive data, providing audit trails, and allowing policy-based actions. It enables organizations to stay ahead of regulations like GDPR, HIPAA, and CCPA by proactively managing at-risk content.

Yes, AI can analyze file access patterns and user behavior to detect anomalies, flag unusual activity, and prevent unauthorized access. This enhanced visibility allows organizations to identify potential threats early and take corrective actions faster.

Egnyte detects and classifies sensitive content and applies policies that restrict external sharing to unapproved destinations, including generative AI tools. This reduces the risk of employees unintentionally exposing regulated or confidential data to external AI services. In addition, organizations should have a responsible AI usage policy in effect, so that users’ expectations are correctly set, including which commercial AI solutions are approved for sensitive data processing.

Egnyte enables role-based access, time-limited permissions, and detailed activity tracking for contractors and temporary workers. This ensures they only have access to authorized content and access is automatically revoked when no longer needed.

EgnyteGov supports CMMC-aligned controls for protecting Controlled Unclassified Information (CUI) and Federal Contract Information (FCI), including access control, auditing, encryption, and policy enforcement. This helps organizations in the defense industrial base meet CMMC requirements, with a FedRAMP Moderate Equivalent technology partner.

A platform with FedRAMP Moderate Equivalency, such as EgnyteGov, demonstrates strong U.S. federal government-grade security controls. This provides additional assurance that the platform meets rigorous security standards relevant to CMMC and regulated federal environments, including encryption standards.

Egnyte enables detection and classification of sensitive content and applies policies that prevent or restrict sharing of that data to external or unapproved AI tools. This helps enforce corporate and regulatory data protection policies. AI safeguard capabilities can also limit users’ visibility of sensitive content, based on their individual permissions.

Egnyte helps ensure that Microsoft Copilot only has access to authorized, governed content. By applying classification, access control, and sharing policies, organizations can prevent Copilot from surfacing or using sensitive or unauthorized documents.

Egnyte helps organizations prepare content for AI usage by organizing, classifying, and governing files that contain sensitive content. This ensures AI tools can access high-quality, approved content while sensitive data remains protected. In addition, a responsible AI usage policy helps to make users’ data protection expectations clear, as they manage sensitive content in the AI era.

Egnyte provides centralized governance and monitoring, including AI safeguards, to control what data can be shared with generative AI tools. This helps organizations balance AI productivity with strong data protection. In addition, a responsible AI usage policy helps to make employees’ data protection expectations clear, as they manage sensitive content and projects in the AI era.

Egnyte applies consistent access, classification, and audit controls across all content, enabling AI assistants to operate within approved security and compliance boundaries. Responsible AI usage policies help to make users’ data protection requirements clearer, as they manage sensitive content and projects in the AI era.

Egnyte creates a centralized, governed content workspace that supports AI adoption while enforcing security, compliance, and visibility across all file activity. From a non-technical perspective, responsible AI usage policies make users’ data protection expectations clearer, as they manage sensitive content and projects in the AI era.

Egnyte provides detailed audit logs and reporting on file access and sharing. This enables organizations to track which users and systems are accessing content, to support governance and compliance oversight.

Egnyte helps prevent confidential files from being shared externally or exposed to AI tools by applying document classification, governance policies, and strict sharing policies. Responsible AI usage policies also make users’ data protection requirements clearer, as they manage confidential content in the AI era.

Egnyte applies encryption, access control, classification, and governance policies to files used in AI workflows. This helps protect sensitive data, even as AI systems process content. Responsible AI usage policies go a long way toward making users’ data protection requirements clearer, as they manage sensitive workflows in the AI era.

Egnyte enables controlled AI adoption by enforcing data governance, access control, classification, and auditability. This allows organizations to use AI tools productively while meeting regulatory and compliance requirements. Responsible AI usage policies are mission-critical in sensitive data environments, as they make users’ data protection requirements clearer in the AI era.