Egnyte Joins the Pax8 Marketplace

MOUNTAIN VIEW, Calif., October 29, 2025 – Egnyte, a leader in secure content collaboration, intelligence, and governance, today announced that its solutions are now offered through Pax8, the leading AI commerce Marketplace. Egnyte’s inclusion in the Marketplace gives the Managed Service Providers (MSPs) in Pax8’s global distribution network an unparalleled opportunity to deliver higher-value cloud services centered on collaboration, intelligence, security, and governance.

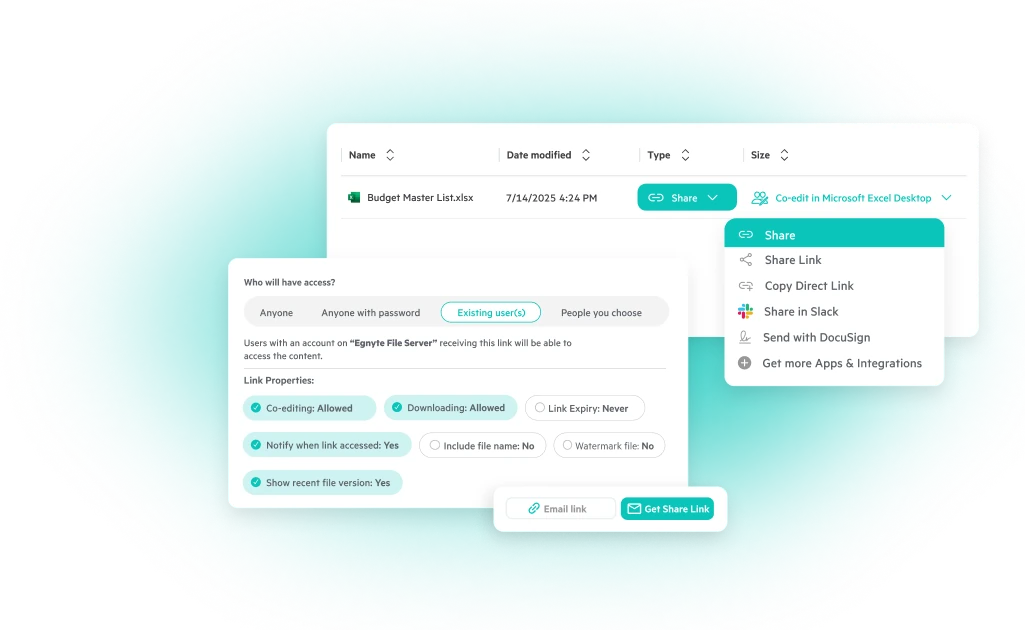

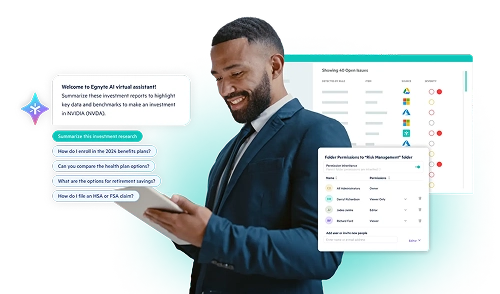

“Today’s announcement with Pax8 marks the initiation of bringing Egnyte’s AI-powered secure collaboration platform to over 40,000 MSPs,” said Stan Hansen, Chief Operating Officer of Egnyte. “Egnyte’s hybrid cloud capabilities and native desktop integrations empower MSPs to move beyond basic file sharing and offer high-value services in collaboration, intelligence, security, and governance. We’re combining powerful AI, seamless Microsoft integration, and deep hybrid cloud experience to unlock new growth and profitability opportunities for Pax8 partners around the world. This provides MSPs with the flexibility to meet their clients wherever they are on their cloud journey, ensuring secure collaboration without disrupting workflows.”

Egnyte’s platform helps MSPs seamlessly migrate customers from on-premises file servers to the cloud, modernizing their content management without compromising performance or compliance. MSPs using Egnyte report, on average, a 30% reduction in support tickets, driven by enhanced usability and automation.

Egnyte is one of only a few partners that integrate directly with Microsoft 365 and Azure while meeting the stringent standards of Microsoft’s CSPP+ certification program. This ensures that MSPs can deliver secure, compliant, and performant collaboration, especially for the large-file workloads and specialized applications common in industries such as construction, design, financial services, media and entertainment, manufacturing, oil and gas, and life sciences.

“We are excited to welcome Egnyte to the Pax8 Marketplace and further strengthen our commitment to deliver intelligent and secure collaboration solutions to our global network,” said Oguo Atuanya, Corporate Vice President of Vendor Experience at Pax8. “Egnyte’s AI-powered platform, hybrid cloud capabilities, and seamless Microsoft integrations enable our partners to elevate their cloud offerings and meet the evolving needs of their customers, unlock new growth opportunities, and drive greater value.”

Egnyte’s addition to the Pax8 Marketplace is a key step in Egnyte’s channel expansion strategy. Earlier this year, Egnyte redesigned its Partner Program to better equip partners with robust training and sales resources and to support co-selling success. The redesign is part of Egnyte’s commitment to building a global partner network that reflects its core partnering principles.

To see Egnyte’s inclusion in the Pax8 Marketplace, click here. To learn more about Egnyte’s partner program, click here.

About Pax8

Pax8 is the technology Marketplace of the future, linking partners, vendors, and small-to-midsized businesses (SMBs) through AI-powered insights and comprehensive product support. With a global partner ecosystem of over 40,000 managed service providers, Pax8 empowers SMBs worldwide by providing software and services that unlock their growth potential and enhance their security. Committed to innovating cloud commerce at scale, Pax8 drives customer acquisition and solution consumption across its entire ecosystem.