Transcoding: How We Serve Videos at Scale

With the rise of social networks and mobile devices, video has become one of the most dominant content types. Various studies show that user engagement is highest with video, which appeals to the new era of consumers. Users also expect content to be available instantly and playback to continue seamlessly as they move from one device to another. This makes storing, serving and playing video a challenging problem to solve cost-effectively at scale. Streaming and playing video over the internet is far more complicated than downloading other file types and opening them in a native application. The main problems with serving and playing video are:

- Video file sizes typically range from hundreds of megabytes to multiple gigabytes, which makes it impractical to download the whole video before playing it. Users may also want to watch only parts of a video, so it does not make sense to wait for the entire file to download.

- Native support for playing videos is very limited. For example, Windows does not play mov files and Apple devices do not play wmv files.

- Videos with the same container type (mp4, mov, wmv, etc.) can have different codecs and not all codecs can be played on all devices.

- High-resolution videos are not suitable for fast streaming on mobile devices with limited bandwidth.

The solution to the above problems is Video Transcoding, which is the process of converting a video file from one format to another and making it viewable across different platforms and devices.

There are two types of streaming: adaptive bitrate and static bitrate. The adaptive bitrate experience is superior because the video stream can be adjusted mid-stream based on the client’s available network speed, which avoids buffering or interruptions in playback. The adaptive format we were interested in is HTTP Live Streaming (HLS), which has emerged as the standard in adaptive bitrate video.
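To make the adaptive part concrete, here is a minimal Python sketch of how a player might choose a rendition from its measured throughput. The rendition names, bitrates, and the `pick_variant` function are hypothetical and chosen only for illustration; real HLS players use more elaborate heuristics.

```python
from typing import List, Tuple

# (rendition name, required bandwidth in bits per second).
# Illustrative values only, not taken from the article.
VARIANTS: List[Tuple[str, int]] = [
    ("360p", 800_000),
    ("480p", 1_400_000),
    ("720p", 2_800_000),
]


def pick_variant(measured_bps: int,
                 variants: List[Tuple[str, int]] = VARIANTS) -> str:
    """Return the highest-bitrate variant that fits the measured throughput.

    Falls back to the lowest-bitrate variant when nothing fits.
    """
    ordered = sorted(variants, key=lambda v: v[1])
    chosen = ordered[0][0]
    for name, required_bps in ordered:
        if required_bps <= measured_bps:
            chosen = name
    return chosen
```

A player would re-run a check like this as its throughput estimate changes and switch renditions at the next segment boundary.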

The next step was to decide when to transcode videos. We considered two options:

- Transcode videos asynchronously after they are uploaded. This would require us to transcode all incoming videos, including those that may never be previewed, and would require an SLA-driven architecture to find the right balance between preview availability and cost.

- Transcode on the fly when a user hits “Play.” This would reduce the amount of video that needs to be transcoded, but video transcoding for large videos can be slow, so it would require high-end machines or even expensive GPUs to meet user expectations.

Our data team helped us get the answers here. It turns out that 20% of all our data is video, and 40% of our users use mobile devices, so we started looking at how they shared, previewed and streamed videos. We found that the majority of users previewed videos at least a few hours after upload, so that gave us enough room to transcode asynchronously.

Next, we looked at which video types are streamed most often.

We were pleasantly surprised to see that most of the videos streamed were mp4, which has broad support to play natively on most devices. So we could prioritize transcoding .mov videos and fall back to native support for mp4 when transcoding hasn’t finished yet.
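The fallback described above can be sketched as a small decision function. This is a hedged illustration in Python: the function name, the return labels, and the set of natively playable extensions are assumptions, not part of the actual service.

```python
# Extensions assumed to play natively on most devices (per the text, mp4).
NATIVELY_PLAYABLE = {"mp4"}


def choose_playback(extension: str, hls_ready: bool) -> str:
    """Decide how to serve a video at the moment the user presses play.

    Returns "hls" when the transcoded stream exists, "native" when the
    original can be played directly, and "pending" otherwise.
    """
    if hls_ready:
        return "hls"
    if extension.lower().lstrip(".") in NATIVELY_PLAYABLE:
        return "native"
    return "pending"
```

Under this sketch, a .mov file with no finished transcode is the only case that leaves the user waiting, which is why such files would be prioritized in the transcoding queue.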

So with some data analysis, we decided to run video transcoders asynchronously after an upload or share link creation and build an SLA-driven architecture around it.

Problems and their Solutions

Breaking down the problem of delivering streaming video to our users, we built a pipeline with the following stages:

Uploading and storing large videos

The Egnyte object store already does this well: we have petabytes of content, including videos, stored by our users.

Notifying Transcoder Jobs

As soon as a video file is uploaded, we want to notify the transcoders, which requires a good messaging system. We were already using RabbitMQ, Redis Pub/Sub and Scribe, but this use case was different because we wanted video transcoders to be deployable anywhere in the world, either in an Egnyte-managed datacenter or in public clouds to scale up for load spikes. This meant we needed a global messaging system, such as Google Pub/Sub or Azure Service Bus, to notify transcoders when a new file is uploaded.

Using a messaging bus came with its own set of challenges. Video transcoding jobs can run for hours, and we needed to hold the lease on a message for that entire time. While a job is transcoding, the message bus should not redeliver the same message to another client, so we needed longer lease times. For example, we started with the latest Google Pub/Sub client but had to revert to a previous version, because the latest one would not allow us to renew the lease for a longer duration.
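One common way to hold a lease during a long job is to renew it periodically from a background thread. The sketch below shows that pattern in Python with only the standard library. `LeaseKeeper` and the `extend_lease` callback are hypothetical names; in a real deployment the callback would wrap whatever lease-extension call the messaging client provides, which is not shown here.

```python
import threading
from typing import Callable


class LeaseKeeper:
    """Calls `extend_lease` every `interval_seconds` until the block exits."""

    def __init__(self, extend_lease: Callable[[], None],
                 interval_seconds: float) -> None:
        self._extend_lease = extend_lease
        self._interval = interval_seconds
        self._stop = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)

    def _run(self) -> None:
        # Event.wait returns False on timeout and True once stop is set,
        # so the loop renews on every timeout and exits promptly on stop.
        while not self._stop.wait(self._interval):
            self._extend_lease()

    def __enter__(self) -> "LeaseKeeper":
        self._thread.start()
        return self

    def __exit__(self, exc_type, exc, tb) -> bool:
        self._stop.set()
        self._thread.join()
        return False  # never swallow exceptions from the job
```

The transcoding work would run inside `with LeaseKeeper(renew, interval):`, with the interval chosen comfortably shorter than the lease duration. If the process dies, renewals stop and the message becomes available to another transcoder once the lease expires.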

Video Transcoding

This is a difficult problem to solve at scale. Our first attempt was to see if we could outsource video transcoding to a partner with an off-the-shelf product, but the costs were too high. In fact, our analysis showed that it was 90% cheaper to run the video transcoders in-house. This led us to conclude that we would need to roll up our sleeves and build this ourselves, for both compliance and cost reasons.

To transcode video to HLS, we chose FFmpeg. FFmpeg is a collection of libraries and tools to process multimedia content such as audio, video, subtitles and related metadata. FFmpeg is by far the best free software available for transcoding videos and it has very good HLS support. FFmpeg can natively create the various HLS segments at different resolutions.
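As a rough illustration of what an FFmpeg HLS invocation looks like, the Python sketch below builds an argument list for a single rendition. It is not the set of parameters the team settled on; the codec choices, bitrates, segment length, and file naming are assumptions picked for the example, and `build_hls_command` is a hypothetical helper.

```python
from typing import List


def build_hls_command(src: str, out_dir: str, height: int,
                      video_kbps: int, segment_seconds: int = 6) -> List[str]:
    """Build an ffmpeg argument list that produces one HLS rendition."""
    name = f"{height}p"
    return [
        "ffmpeg", "-i", src,
        # Scale to the target height; -2 keeps the aspect ratio with an even width.
        "-vf", f"scale=-2:{height}",
        "-c:v", "libx264", "-b:v", f"{video_kbps}k",
        "-c:a", "aac", "-b:a", "128k",
        # HLS muxer options: segment length, VOD playlist, segment file pattern.
        "-hls_time", str(segment_seconds),
        "-hls_playlist_type", "vod",
        "-hls_segment_filename", f"{out_dir}/{name}_%03d.ts",
        "-f", "hls", f"{out_dir}/{name}.m3u8",
    ]
```

On a machine with FFmpeg installed, the list could be passed to `subprocess.run(cmd, check=True)`, once per resolution.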

After detailed testing, we chose the following ffmpeg command line parameters:

Our video transcoding job outputs the following files:

- stream.m3u8: The master playlist, which lists all the resolutions that Egnyte supports, their playlists, and their recommended bandwidth.

- <resolution>.m3u8: A playlist for one specific resolution. One is generated for each resolution supported by Egnyte and listed in stream.m3u8; for example, 480p.m3u8 is generated for 640x480.

- Video segments: Smaller, fixed-duration video segments for each supported resolution. Unlike the *.m3u8 files, these are actual video files rather than metadata files.
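The relationship between the master playlist and the per-resolution playlists can be illustrated with a short Python sketch that renders a master playlist. The `#EXTM3U` and `#EXT-X-STREAM-INF` tags with `BANDWIDTH` and `RESOLUTION` attributes come from the HLS specification; the function name and the bandwidth values in the usage note are assumptions, and this is not the output of the actual transcoding job.

```python
from typing import List, Tuple


def build_master_playlist(variants: List[Tuple[str, int, int, int]]) -> str:
    """Render an HLS master playlist.

    Each variant is (playlist_uri, bandwidth_bps, width, height).
    """
    lines = ["#EXTM3U"]
    for uri, bandwidth, width, height in variants:
        # One STREAM-INF tag describes the rendition; the next line is its URI.
        lines.append(
            f"#EXT-X-STREAM-INF:BANDWIDTH={bandwidth},RESOLUTION={width}x{height}"
        )
        lines.append(uri)
    return "\n".join(lines) + "\n"
```

For example, `build_master_playlist([("480p.m3u8", 1400000, 640, 480), ("720p.m3u8", 2800000, 1280, 720)])` yields a five-line playlist in which each rendition is described by one tag line followed by the playlist it points to.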

Sample generated m3u8 files are as follows:

stream.m3u8:

480p.m3u8:

720p.m3u8:

Scalability

The next challenge we had to solve was scaling video transcoding jobs on demand. The run times of video transcoding jobs vary widely: jobs processing smaller videos can finish quickly, but larger jobs can take an hour, queuing up other jobs behind them. It was clear to us at the outset that we needed to deploy our video transcoding jobs on a platform that could adjust dynamically based on the current backlog and seasonality.

Our first preference was a serverless architecture for deploying video transcoders. We liked the idea of building this on Cloud Functions, which would scale automatically without us worrying about backlog and load spikes. However, we soon realized that it was too early to rely on these services at scale. Video transcoding is a CPU-intensive operation that needs specific hardware, such as dedicated CPUs (or even GPUs) with enough memory, and a native FFmpeg installation. This was challenging to build on serverless, and we determined that Kubernetes suited us better, so we created Alpine-based Docker containers with FFmpeg and Python and deployed them on Kubernetes. Since we already used Kubernetes within Egnyte, the learning curve was also smaller.

We found that video transcoding jobs run faster on GPUs, but doing this isn’t cost-effective. The best trade-off between speed and cost was allocating 4 CPUs to each video transcoder job. At 4 CPUs, we were able to process videos in about 25-40% of the video play time. In other words, a 1-hour video would take about 15-24 minutes to transcode. Adding more CPUs to a video transcoder job did not produce linear benefits, so it was best to stick to 4 CPUs per job and provision more jobs instead.
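The arithmetic behind that estimate is simple enough to write down. The Python sketch below applies the quoted 25-40% range to a video's play time; the function name is hypothetical and the percentages are the only inputs taken from the text.

```python
from typing import Tuple


def estimate_transcode_minutes(play_minutes: float) -> Tuple[float, float]:
    """Return (fastest, slowest) expected transcode time in minutes.

    Uses the 25-40% of play time observed with 4 CPUs per job.
    """
    fastest = play_minutes * 25 / 100
    slowest = play_minutes * 40 / 100
    return fastest, slowest
```

For a 60-minute video this gives a range of 15 to 24 minutes.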

We deployed autoscalers based on custom metrics, which look at the current backlog in the video transcoding queue and deploy more jobs as needed. The autoscaler reduces the number of jobs when the backlog goes down. The autoscaler is the most important part of managing costs and user experience. Without it, it is impossible to provide a good transcoding SLA without over-provisioning and thus escalating costs.
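A backlog-driven scaling rule can be sketched in a few lines of Python. This is an assumed policy for illustration, not the actual autoscaler: the target of a fixed number of queued messages per transcoder, the bounds, and the function name are all hypothetical.

```python
import math


def desired_transcoders(backlog: int, messages_per_transcoder: int,
                        min_transcoders: int, max_transcoders: int) -> int:
    """Return how many transcoder jobs to run for the current backlog."""
    if messages_per_transcoder <= 0:
        raise ValueError("messages_per_transcoder must be positive")
    wanted = math.ceil(backlog / messages_per_transcoder)
    # Clamp so a floor of capacity is always available and costs stay bounded.
    return max(min_transcoders, min(max_transcoders, wanted))
```

The lower bound keeps some capacity warm for new uploads, and the upper bound is the cost ceiling that an operator would raise deliberately rather than automatically.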

Transcoders have one more challenge: they can get stuck every now and then. To solve this, we built health monitors into our transcoder Docker containers. If the health check fails, the Kubernetes cluster restarts the container. Determining whether a transcoder is stuck is not easy, so we rely on two heuristics:

- We fail the health check if the transcoder hasn’t received any message from the messaging bus topic for a while and there are still outstanding messages in the topic.

- We fail the health check if the FFmpeg job is stuck, i.e. it is still running but is not producing any more HLS segments.

When a transcoder is restarted, the message it was holding returns to the backlog after its lease expires and is picked up by the next available transcoder.
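The two health-check rules can be expressed as a small pure function. The Python sketch below is an illustration under stated assumptions: the state fields, the thresholds, and the choice to apply the first rule only while no FFmpeg job is running are not taken from the actual implementation.

```python
from dataclasses import dataclass


@dataclass
class TranscoderState:
    seconds_since_last_message: float
    outstanding_messages: int
    ffmpeg_running: bool
    seconds_since_last_segment: float


def is_healthy(state: TranscoderState,
               idle_limit_s: float = 600.0,
               stall_limit_s: float = 300.0) -> bool:
    """Return False when the transcoder looks stuck."""
    if state.ffmpeg_running:
        # Rule 2: a running job must keep producing HLS segments.
        return state.seconds_since_last_segment <= stall_limit_s
    # Rule 1 (assumed to apply to idle workers only): work is waiting in
    # the topic, yet this worker has not received anything for a while.
    starved = (state.outstanding_messages > 0
               and state.seconds_since_last_message > idle_limit_s)
    return not starved
```

A container health endpoint could return a failure status whenever this function returns False, which would let Kubernetes restart the container.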

Deployment of the service also needed to be optimized for the target public cloud. While we were building this service, Google added support for preemptible nodes in GKE, Google’s managed Kubernetes service. Preemptible nodes are cheaper than regular ones; the downside is that they can be restarted often and relocated to different infrastructure within Google. Because we built our transcoding service to be globally deployable and scalable across local and public clouds based on our needs, GKE with preemptible nodes suited us for specific workloads and cut our costs further. We built our GKE cluster with both preemptible and regular nodes, so that the required number of transcoders is always available regardless of preemptible availability, while costs stay down because preemptible nodes are used whenever they are available.

Storing transcoded videos

As seen above, transcoding is a very expensive operation, so it makes sense to retain transcoded objects for some reasonable amount of time. Depending on the video quality uploaded, transcoded videos could be anywhere from 20% to 50% of the original size. At our scale, this is also an interesting problem.

We needed a long-term storage option for the transcoded videos, one that is durable and scales to handle petabytes. Object lifetime can vary: in some cases it is a few days, but in most cases it is months. To solve this problem, we needed something we termed a Derived Object Store: an object store for derived objects such as transcoded videos, whose life cycle depends on the primary object uploaded by the user but whose availability requirements can be somewhat relaxed.

At the time, our core object store did not support storing derived objects, but we were already scaling it to billions of objects and petabytes of data. We knew how to manage and monitor it well, and it was a perfect place to store the transcoded objects. So we added the Derived Object Store feature to our core object store and solved one more piece of the transcoding puzzle.
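The key property of a derived object store is that derived objects live and die with their primary object. The in-memory Python sketch below illustrates only that life-cycle rule. The class and method names are hypothetical and bear no relation to the real Egnyte object store API.

```python
from typing import Dict, Optional


class DerivedObjectStore:
    """Toy store where derived objects are keyed by their primary object."""

    def __init__(self) -> None:
        self._derived: Dict[str, Dict[str, bytes]] = {}

    def put(self, primary_id: str, name: str, data: bytes) -> None:
        self._derived.setdefault(primary_id, {})[name] = data

    def get(self, primary_id: str, name: str) -> Optional[bytes]:
        return self._derived.get(primary_id, {}).get(name)

    def delete_primary(self, primary_id: str) -> int:
        """Drop every derived object of a primary; return how many were removed."""
        removed = self._derived.pop(primary_id, {})
        return len(removed)
```

Because derived objects can always be regenerated from the primary, a store like this can trade some availability for cost, which matches the relaxed availability described above.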

Serving transcoded videos

Serving streaming videos to users is different from serving API or web UI requests. Video requests are usually larger and longer-running, and traffic can arrive in sudden bursts when videos go viral. Given the unpredictable nature of video requests, we decided to keep video serving completely isolated from our regular traffic.

Our video service is based on OpenResty, with all authentication and video discovery written in Lua. We deployed the video service with a cache on dedicated infrastructure, fronted by a dedicated domain name such as media.egnyte.com. The video service does not share any infrastructure components, such as firewalls, switches, and ISP links, with our primary services, which allows us to scale it and rate limit users based purely on our video needs.

Since video service nodes were mostly doing authentication, authorization and serving videos, we had to scale them based on the number of currently running connections. To achieve this using the Kubernetes HPA, we had to send the custom metric “active_connections” from OpenResty to Stackdriver. We did this by deploying two containers alongside OpenResty in the video service pod:

- nginx/nginx-prometheus-exporter - Export nginx metrics to Prometheus (a monitoring tool)

- gcr.io/google-containers/prometheus-to-sd - Export Prometheus metrics to Stackdriver

Once we had the active connections metric in Stackdriver, we deployed a custom-metric HPA to scale up video service pods when active connections increase.
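For reference, the Kubernetes documentation describes the HPA's core calculation as desired replicas = ceil(current replicas × current metric value / target metric value). The Python sketch below applies that formula to an average active-connections metric. The function name, bounds, and example numbers are assumptions, and the real controller also applies a tolerance band and stabilization behavior that are omitted here.

```python
import math


def hpa_desired_replicas(current_replicas: int,
                         current_avg_connections: float,
                         target_avg_connections: float,
                         min_replicas: int = 1,
                         max_replicas: int = 10) -> int:
    """Apply the basic HPA scaling formula, clamped to the replica bounds."""
    if target_avg_connections <= 0:
        raise ValueError("target_avg_connections must be positive")
    desired = math.ceil(
        current_replicas * current_avg_connections / target_avg_connections
    )
    return max(min_replicas, min(max_replicas, desired))
```

With 4 pods averaging 150 active connections against a target of 100, this yields 6 pods; when the average drops to 50, it yields 2.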

Finally, when put together, this is how our overall architecture looks:

Monitoring

Monitoring is key to any production-scale system, and transcoding and serving videos is no different. There are several things to monitor, and we do that using metrics emitted by our transcoders and by mining stats from other components, such as Pub/Sub topics and the video service’s OpenResty servers.

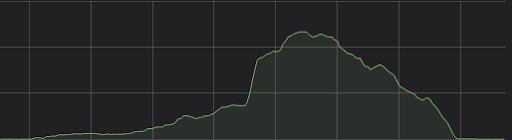

From a transcoding perspective, the key metric to measure is the backlog, as this directly affects our users. We monitor the number of messages outstanding in the Pub/Sub topic. At any point in time, if there are too many messages in the queue waiting to be processed, the autoscaler adds more transcoders. As seen below, as the number of outstanding messages increases, we increase the number of transcoders. As the backlog comes down, we reduce the number of transcoders.

Number of messages:

Number of Transcoders:

Our SRE team is also notified when the backlog exceeds a certain threshold and the autoscaler cannot keep up because it has reached the maximum number of transcoders allowed. SRE then makes an informed decision on whether the autoscaler limits need to be raised.

We also monitor transcoding speed and transcoding lag, which help us gauge user experience.

We monitor videos processed as well to understand the traffic patterns and costs.

This is how we solved the long-standing problem of streaming videos to our users across devices in a cost-effective and compliant way. The next phase of this project is finding the best way to deliver high-performance, real-time transcoding on GPUs for recently uploaded videos, so that they can be served immediately.

Interested in being a part of our amazing team at Egnyte? We are always hiring and solving interesting problems, so check out our Jobs Page or contact us at jobs@egnyte.com
