Evaluating MySQL Recursive CTE at Scale

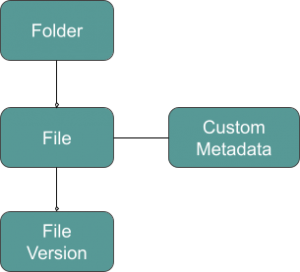

Egnyte is a unified platform to securely govern content everywhere. We manage billions of files and petabytes of content. One of the core infrastructure components powering such a scale is called MDB or metadata database. It is a cluster of hundreds of MySQL instances storing billions of metadata records. It stores information about files, versions, folders, custom metadata, and their relationships. We are a multi-tenant SaaS application and serve tens of thousands of SMB customers storing anywhere from 1M-10M files and many enterprise customers storing more than 100M files.

Users create, edit, and collaborate on the business-focused content using the Egnyte Platform. Our databases, in particular the MDB cluster, receive billions of calls per day in spite of heavy application caching. One of the most common ways our users access content is by file or folder path. This results in 100s of millions of calls to the cluster to retrieve a folder object by path, making it the heavily used DB query.

When we moved from BerkelyDB—a NoSQL solution—to MySQL, we had to make a design choice to pick between two table schema options:

- folder_id, parent_id, folder_name

- folder_id, parent_id, folder_name, path

Since MySQL 5.7 and the versions prior didn’t have fast native support for querying hierarchical data like folder tree, if we had picked design number one, we would have to make multiple calls to find a folder by the path and pay a penalty on every read. If we had picked design number two then upon writing, we would have to pay a penalty for denormalizing this data.

As our number of reads outweighs the number of writes, we decided to go with design number two and denormalize the path in each folder entity. Now each folder row has the full path of the node in the folder tree. While this worked fine, for the most part, it wasn’t without its limitations. The first being the key/index length. MySQL supports the key length of up to 255 characters with utf8mdb3 charset. With utf8mdb4, it’s reduced to a mere 191 characters. And this makes it hard to index and search for paths that are longer than these key limitations, and for such queries, the DB server ends up doing an index-range scan with high latency.

Another issue that we often deal with is table row mutation, especially when a top-level folder is moved or renamed. Many of our customers organize their projects in monthly folders, and one of the patterns is that they start with a current folder, which they’ll collaborate on until the end of the month and then rename it to a format that adds the month/year into the name. As can be seen here, this action results not only in the top-level folder row update, but also updates all folders in that folder hierarchy, mainly to modify the denormalized path that’s stored with the folder. In some cases, the number of folders updated just for the top-level folder rename/move runs into hundreds of thousands. And as a side effect, this puts a strain on the master database, causes replication lag, and issues in the caching layer.

As larger customers onboard, they land and expand, and as a result, the data stored in our tables for existing customers grows, it’s imperative that we keep many of our largest tables as dormant as possible. For the folder table, most of the mutation occurs due to the explosion of writes caused by path denormalization. We can keep it dormant by removing the path column, but this will bring back the multiple-call problem.

During the planning to move the database clusters to MySQL 8, one of the features that caught our attention were common table expressions.

A common table expression (CTE) is a named temporary result set that exists within the scope of a single statement and that can be referred to later within that statement, possibly multiple times. The following discussion describes how to write statements that use CTEs.

One aspect of CTE is that the named temporary result can be referred to and added to within the statement, much like a recursive call, making it a great feature for working with hierarchical data. This is called a recursive CTE. An example of one is as follows:

WITH RECURSIVE trs (n) AS

(

SELECT 1

UNION ALL

SELECT n + 1 FROM trs WHERE n < 5

)

SELECT * FROM trs;

Here, we start with a value of 1 in the temporary result set `trs` with just one field `n`. Then we keep adding (n + 1) to it until we no longer add any after the n reaches 5. Then we retrieve all rows from the temporary result set trs. It produces the following output.

+------+

| n |

+------+

| 1 |

| 2 |

| 3 |

| 4 |

| 5 |

+------+

How could it help us?

As alluded to above, to serve path based calls, we needed a way to not store the entire path with a folder and still be able to query all the information from the DB in one call. With recursive CTE, we can do exactly that. Consider the following table.

CREATE TABLE folders

(

id BIGINT PRIMARY KEY,

name VARCHAR(255),

parent_id BIGINT,

ctime TIMESTAMP,

mtime TIMESTAMP

);

Now, in order to fetch a folder by a given path, all we need to do is fire a recursive CTE query that recursively traverses down the folder hierarchy until it hits the node we attempt to get. So, the query would look like this:

with recursive

cte as (

select id, path_element(@path, 1) as child, to_varchar5000('') as path, 1 as count

from folders

where parent_id is NULL

union all

select f.id, path_element(@path, c.count + 1) as child, concat(c.path, "/", f.name) as path, c.count+1 as count

from folders f join cte c on f.parent_id = c.id

where f.name = c.child

)

select * from cte where child = '';

Of course, this requires a couple of custom functions; path_element -> to return a path element at a given position in the given path and to_varchar5000 -> to convert an empty string to a type of varchar(5000), which is required as we expand on the path by appending it to. Otherwise, the query would fail with the following error.

ERROR 1406 (22001): Data too long for column 'path' at row 1

How this query works:

We first seed the temporary result set, cte, with the id of the root folder in the system (parent_id IS NULL) and the name of the child node we want to look for. Then, we expand on it by finding the child and recursively invoke it until we no longer add to the result set. At that point, we would be either at the leaf, or we wouldn’t have found the requested node. Once the temporary result set is produced, we pick the row which has an empty child field in the result set.

For example, consider querying for a folder in the following table with a path: /storage_f1/marketing/campaigns/2019/europe.

idparent_idname1NULL<empty>21storage_f132sales42marketing53pipeline65Q374

campaigns84events972019109europe119asia

The seed query will produce a result set

idchildpathcount1storage_f1 1

then the first run of the expansion/recursive query will add the following

idchildpathcount1storage_f1 12marketing/storage_f12

And, this will continue expanding it until it reaches the leaf node (europe)

idchildpathcount1storage_f1 12marketing/storage_f124compaigns/storage_f1/marketing

372019/storage_f1/marketing/compaigns49europe/storage_f1/marketing/compaigns

/2019510/storage_f1/marketing/compaigns/2019/europe6

Beyond this, it won’t expand the result set as we have reached the left node.

So, now we can perform the final query on the temporary result set. That is, find the row with the requested path. This will also be the row with the empty child’s name.

If a non-existent path is queried for -- for example, /storage_f1/marketing/compaigns/2020 --, the following is what the temporary result set would look like, and we won’t find a row for the requested path.

idchildpathcount1storage_f1 12marketing/storage_f124compaigns/storage_f1/marketing

372020/storage_f1/marketing/compaigns4

We started evaluating the performance of Recursive CTE vs. our existing denormalized path-based query, and below are some of the use cases and our benchmarks. The following benchmarks are very specific to how our schema is designed and how we organize data internally and may not be representative of the typical Recursive CTE performance. We used a folders table with 9 million rows. A custom-configured GCE instance was used with 32 CPU, 128G RAM, and 3.5T SSD Disk. The MySQL 8.0 instance was allocated a 96G buffer.

Retrieve a folder by path:

This is the case we saw an example of above. When the queries were executed directly in MySQL 8, the CTE method was slower by a factor of ~2. At the application level, considering the network overhead, the slowness factor slightly came down to ~1.7. Even though the CTE is expectedly slower, most read-cases can be scaled with caching, and with some of our write use cases involving mutating hundreds of thousands of folder entries, CTE may prove to be beneficial, as we would need to just update one or a few folders when a top-level folder is moved or renamed.

With longer paths that would not be able to be served entirely using index due to key length limitation, the recursive CTE method was outperforming the path query by a factor of ~10. The longer the path, the more performant the recursive CTE got. For example, between paths of depths 12 and 114, the numbers for recursive CTE went from negative to higher positive as compared to that of the path-based queries

Path depthBy denormalized pathBy Recursive CTE120.52 msec1.05 msec114171 msec2.1 msec

Retrieve a folder by id:

While this may seem straightforward at first, without the path column, we need to construct the path as we retrieve the folder information for the given id. We can take a bottom-up approach, in which we first find the record for the given id, and then we navigate up the folder tree and construct the path until we reach the system root folder.

with recursive

cte as (

select id, parent_id, to_varchar5000(name) as path

from folders

where folder_id = @folderId

union all

select c.id, f.parent_id, concat(f.name, '/', c.path) as path

from folders f join cte c on f.id = c.parent_id

where c.parent_id is not null

)

select * from cte where parent_id is null;

When ran against a dataset of 9M folders, a batch query to list folder information for 10+ folder ids by the traditional method produced results in 0.6 msec whereas the Recursive CTE took 1.3 msec, a performance hit of roughly 2x. The deeper the path, the slower the recursive CTE became. For retrieving a folder with a depth of 100, the recursive CTE was almost 5 times slower (0.62 msec vs 2.9 msec), as it had to navigate such a large tree to be able to construct the path

Path depthBy denormalized pathBy Recursive CTE80.6 msec1.3 msec1000.62 msec2.9 msec

CTE Path Resolution At Volume

Another use case that would heavily use recursive CTE is retrieving a list of all folders for bi-directional sync purposes. It requires generating a flat list of all folders on the customers’ namespace, including the full path of the folders. On a dataset of about 9 million folders, compared to the simple select query with path, the recursive CTE based build-path-on-the-go approach was 9.4 times slower when executed directly, but considering the network and application overhead in retrieving and processing the result set, the recursive CTE was only 2.8 times slower.

with recursive

cte as (

select folder_id, to_varchar5000(canonical_name) as path

from folders

where parent_id is NULL

union all

select f.folder_id, concat(c.path, '/', f.canonical_name) as path

from folders f join cte c on f.parent_id = c.folder_id

)

select folder_id, path from cte; Direct ExecutionWith Application & n/w OverheadBy denormalized path6.43 sec29.42 secBy Recursive CTE60.2 sec83.19 sec

Closing Thoughts

As mentioned earlier, despite the CTE being slower, it may help us when it comes to mutating a large number of folders, mainly due to path modifications. We’re still experimenting with MySQL CTE. We’ll first upgrade the metadata DB hosts to MySQL 8.0, and then build the CTE based path resolution. With the proposed CTE-based path resolution and the current denormalized path implementation co-existing, we’ll perform A/B testing on the performance, scalability, etc. and we’ll take it from there. We expect some things to become slower while helping scale the system, and some things to become faster. All along, we will use feature flags, collect various metrics, and decide what to materialize for the long run.