Building an Intelligent and Autonomous Search Agent at Egnyte

Finding the right information quickly is a necessity for our customers. They rely on Egnyte to manage hundreds of petabytes of data, from financial reports and legal contracts to marketing assets and engineering plans.

Until now, we provided this capability through a powerful search engine equipped with keyword matching, boolean operators, and UI-based filters. But as the volume and complexity of data grow, traditional search becomes a frustrating exercise in guesswork. A business-critical question like “What are the key terms in the final, signed MSA for Acme Corp from last July?” triggers a familiar, painful workflow.

That’s why we’re excited to tell you about our new, intelligent Search Agent. It’s a search engine that uses reasoning to understand complex queries, execute multi-step plans, and get smarter with every interaction.

How Search Agent Helps

Without Search Agent, it could take 20 minutes of keyword variations (such as "Acme MSA" and "Acme Corp contract final"), spelunking through folders (/Contracts/2023/Signed/), and opening multiple files to find the correct file. Users needed to translate rich intent into a series of simplistic queries, navigate keyword mismatches, and sift through noisy results.

The core issue is the semantic gap—users think in concepts, but search engines match only keywords. We recognized the fundamental need to evolve beyond traditional paradigms and build a truly intelligent system that understands user intent.

We’ve bridged that gap with Search Agent, which takes one query and turns it into a short, precise set of results. Search Agent is intelligent, autonomous, and built on the ReAct (Reason + Act) framework. It understands complex queries, formulates multi-step strategies, learns from its mistakes, and delivers a curated set of final, relevant results.

Under the Hood of Agentic Search

Egnyte provides two primary ways to find information. The first is content search with filters, which functions like a traditional search engine, searching for keywords within document text. The second, and more precise, method is metadata search.

Metadata tags are structured properties that can be applied to files and folders, adding a layer of business context. For example, an AEC project document might be tagged with a property “status” with the value “approved”. While our platform supports both search types, the real power lies in leveraging metadata for precision. Our Search Agent is explicitly designed with a metadata-first strategy, always seeking to translate a user's natural language query into a structured metadata filter for precise results.

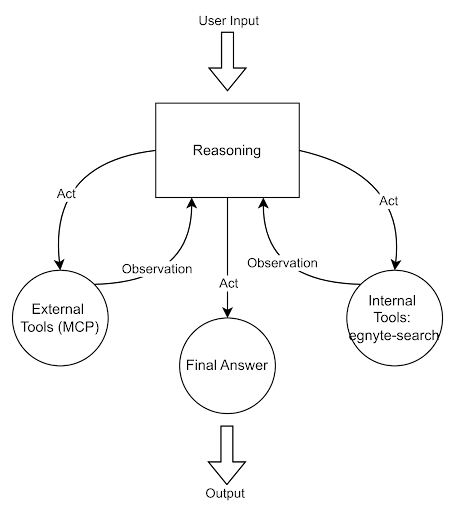

The Search Agent is built using LangGraph, a library for creating stateful, multi-actor applications with LLMs. The agent's workflow is modeled as a ReAct loop, where it continuously cycles between reasoning (analyzing the state and deciding the next action with an LLM) and acting (executing tools like egnyte-search). This stateful architecture allows the agent to perform iterative refinement, using the results of a search on one turn to formulate a more precise, metadata-driven search on the next, mimicking the flow of a human researcher.
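As a rough illustration of this reason/act cycle, here is a minimal sketch in plain Python rather than LangGraph. The `AgentState` class, the `reason` function (a hard-coded stand-in for the LLM call), and the canned data behind `fake_search` are all assumptions made for the example, not Egnyte's implementation.

```python
from dataclasses import dataclass, field


@dataclass
class AgentState:
    """State carried across turns of the loop (hypothetical shape)."""
    query: str
    observations: list = field(default_factory=list)
    done: bool = False


def fake_search(filters):
    """Stand-in for the egnyte-search tool, backed by canned data."""
    files = [
        {"name": "acme_msa_final.pdf", "metadata": {"status": "approved"}},
        {"name": "acme_msa_draft.docx", "metadata": {"status": "draft"}},
    ]
    return [f for f in files
            if all(f["metadata"].get(k) == v for k, v in filters.items())]


def reason(state):
    """Stand-in for the LLM: pick the next action from the current state."""
    if not state.observations:
        # Nothing observed yet, so start with an unfiltered search.
        return {"tool": "egnyte-search", "filters": {}}
    last = state.observations[-1]
    if len(last["results"]) > 1:
        # Too many hits: refine using metadata seen in the previous turn.
        return {"tool": "egnyte-search", "filters": {"status": "approved"}}
    return {"tool": "finish"}


def run_agent(query, max_steps=5):
    state = AgentState(query=query)
    for _ in range(max_steps):
        action = reason(state)                      # reason
        if action["tool"] == "finish":
            state.done = True
            break
        results = fake_search(action["filters"])    # act
        state.observations.append({"action": action, "results": results})
    return state


final = run_agent("approved Acme MSA")
print([r["name"] for r in final.observations[-1]["results"]])
```

The `max_steps` cap is a design choice for the sketch: it bounds the loop even if the reasoning step never decides to finish.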

Next, we’ll explore the fundamental problems with search and how Egnyte Search Agent solves them.

From “Alex” to alex_smith: Decoding the Ambiguity of Natural Language

A query like "Find marketing presentation from Alex" is simple for a human but complex for a machine. It contains multiple entities (document type, owner) that need to be resolved.

Intent Decomposition and Tool Orchestration

The agent uses its underlying LLM to parse the user's intent and break it down into a sequence of actions using specific tools:

- Resolves ambiguity: It recognizes "Alex" as a person and calls the user-lookup tool with the query "Alex". There could be multiple people named Alex in the company.

- Loads context: The tool returns a list of potential users with their details, including their full name, which is loaded into the LLM context.

- Creates a precise query: The agent now formulates a precise search using the egnyte-search tool, setting the user name filter to the correct first and last name, the file type filter to “presentation”, and the query to “marketing”.

Instead of a failed keyword search for "Alex", the agent intelligently resolves the entity and uses that data to construct a precise, successful query.
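The three steps above can be sketched as follows. This is an illustration under stated assumptions: the user directory, the `user_lookup` and `pick_user` helpers, the filter names, and the already-parsed `intent` dictionary are all hypothetical, and the department-based disambiguation is a placeholder for whatever the LLM actually does with the candidates in its context.

```python
# Hypothetical user directory standing in for the user-lookup tool's backend.
USERS = [
    {"username": "alex_smith", "full_name": "Alex Smith", "department": "Marketing"},
    {"username": "alex_jones", "full_name": "Alex Jones", "department": "Finance"},
    {"username": "maria_chen", "full_name": "Maria Chen", "department": "Marketing"},
]


def user_lookup(name):
    """Return every user whose full name contains the given name."""
    needle = name.lower()
    return [u for u in USERS if needle in u["full_name"].lower()]


def pick_user(candidates, query_text):
    """Placeholder for the LLM's choice: keep the candidate whose department
    appears in the query, and give up if that is not unique."""
    text = query_text.lower()
    matches = [u for u in candidates if u["department"].lower() in text]
    return matches[0] if len(matches) == 1 else None


def build_search_request(intent):
    """Turn a parsed intent into a structured egnyte-search request."""
    candidates = user_lookup(intent["person"])          # resolve ambiguity
    owner = pick_user(candidates, intent["keywords"])   # use loaded context
    filters = {"file_type": intent["file_type"]}
    if owner is not None:
        filters["user_name"] = owner["full_name"]
    return {"query": intent["keywords"], "filters": filters}


# Assume the LLM has already parsed "Find marketing presentation from Alex".
intent = {"person": "Alex", "file_type": "presentation", "keywords": "marketing"}
request = build_search_request(intent)
print(request)
```

If the candidate list cannot be narrowed to one person, the sketch simply omits the user filter; a real agent might instead try another tool or ask for clarification.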

Unlocking Hidden Power: The Problem of Invisible Metadata

Metadata tags (e.g., jurisdiction: "California") are the key to search precision, but users often don't know which schemas, sections, or properties are available, so they can't use the UI filters effectively.

Dynamic Metadata Discovery: How Search Agent Discovers Metadata You Didn’t Know Existed

The agent’s primary strategy is to uncover and utilize this structure on the user's behalf. For a query like "find approved contracts," its thought process is:

- Discover: "Okay, the user mentioned 'approved contracts.' This sounds like something I can filter on. I'll look for available metadata related to contracts." It calls the metadata-discovery tool, which identifies 'Egnyte Contract Properties' as the most relevant metadata.

- Load context: "Great, I found the 'Egnyte Contract Properties' metadata. Now I need to see what filters are available inside it." It uses the schema-lookup tool to get the schema, learns that there is a status filter, and adds the schema to the LLM context.

- Precise query: "Perfect. I'll now search for files using the status filter set to 'approved'." It executes an egnyte-search call with that precise filter.

The user doesn't need to know anything about the metadata schema—the agent acts on their behalf, dynamically learning and applying the structure to get precise results.
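The discover, load, and query steps can be pictured with a small sketch. The schema catalog, its keyword sets, and the word-overlap matching are assumptions for illustration only; in the real agent an LLM does the matching, and the tool signatures are not documented here.

```python
from typing import Optional

# Hypothetical metadata catalog; the names and values are made up.
SCHEMAS = {
    "Egnyte Contract Properties": {
        "keywords": {"contract", "contracts", "msa", "agreement"},
        "properties": {
            "status": ["draft", "approved", "expired"],
            "jurisdiction": ["California", "New York"],
        },
    },
    "Project Properties": {
        "keywords": {"project", "projects"},
        "properties": {"phase": ["design", "construction"]},
    },
}


def metadata_discovery(query: str) -> Optional[str]:
    """Return the schema whose keywords overlap the query most, if any."""
    words = set(query.lower().split())
    best = max(SCHEMAS, key=lambda name: len(SCHEMAS[name]["keywords"] & words))
    return best if SCHEMAS[best]["keywords"] & words else None


def schema_lookup(name: str) -> dict:
    """Return the filterable properties of a schema."""
    return SCHEMAS[name]["properties"]


def build_filter(query: str) -> dict:
    """Map query words onto property values from the discovered schema."""
    schema = metadata_discovery(query)
    if schema is None:
        return {}
    words = set(query.lower().split())
    return {
        prop: value
        for prop, values in schema_lookup(schema).items()
        for value in values
        if value.lower() in words
    }


print(metadata_discovery("find approved contracts"))
print(build_filter("find approved contracts"))
```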

Learning From Failures: Why Traditional Systems Never Learn

A human researcher learns from trial and error. If a search with the ‘status’ filter fails but a search with ‘proj_status’ succeeds, they remember that for next time. Traditional systems make the same mistake over and over.

The Context Learning Store: Dynamically Load Instructions to the Context

The agent learns from its mistakes and shares that knowledge across the entire organization. If a metadata filter attempt fails but a subsequent one succeeds, the agent generates a "learning" that records this insight when it calls the results-curation tool.

This instruction is saved to our Context Learning Store. The next time any user runs a query about project status, this learning is dynamically loaded into the agent's context, ensuring it uses the correct filter on the first try. The entire system gets collectively smarter with every interaction.
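One way to picture this mechanism is the sketch below. The `ContextLearningStore` class here is a hypothetical in-memory stand-in, the keyword-overlap retrieval is an assumption (the source does not say how learnings are matched to queries), and `derive_learning` is an illustrative helper rather than the agent's actual logic.

```python
from dataclasses import dataclass, field


@dataclass
class ContextLearningStore:
    """In-memory stand-in for a shared store of learnings."""
    learnings: list = field(default_factory=list)

    def record(self, keywords, instruction):
        self.learnings.append(
            {"keywords": {k.lower() for k in keywords}, "instruction": instruction}
        )

    def load_for(self, query):
        """Return instructions whose keywords overlap the query's words."""
        words = set(query.lower().split())
        return [item["instruction"] for item in self.learnings
                if item["keywords"] & words]


def derive_learning(attempts):
    """If a failed filter is followed by a successful one, describe the fix."""
    for failed, succeeded in zip(attempts, attempts[1:]):
        if failed["hits"] == 0 and succeeded["hits"] > 0:
            bad = next(iter(failed["filter"]))
            good = next(iter(succeeded["filter"]))
            return f"Filter on '{good}' rather than '{bad}'; '{bad}' returned no results."
    return None


# Hypothetical history of one search session.
attempts = [
    {"filter": {"status": "active"}, "hits": 0},
    {"filter": {"proj_status": "active"}, "hits": 12},
]

store = ContextLearningStore()
learning = derive_learning(attempts)
if learning:
    store.record(["project", "status"], learning)

# A later query from any user picks up the learning before the first search.
print(store.load_for("show project status for Falcon"))
```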

Beyond the API: Solving Problems Too Complex for a Single Query

No single API can support every conceivable combination of filters. A user might want to "find all PDFs and Word documents in the 'Q3 Campaigns' folder," a request that can't be fulfilled with a single standard API call.

Multi-Step Strategic Execution: Orchestrating Multi-Step Searches Like a Human Researcher

The agent acts as a smart orchestrator, chaining tools to accomplish what a single API call can’t by:

- Finding the folder: First, it uses egnyte-search with the item type filter set to folder to find the folder's exact path.

- Searching within the folder: It then runs a second egnyte-search call, using the folder paths filter to restrict the search to that folder and setting the file type filter to “Document”, followed by a third call with the file type filter set to “PDF”.

The agent decomposes the user's complex request into a workflow of achievable steps, effectively extending the capabilities of the underlying search API.
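The chained calls might look like the following sketch. The `egnyte_search` function is a local stand-in with assumed parameter names (`item_type`, `file_type`, `folder_path`) running over made-up items; it is not the real tool's signature.

```python
# Made-up index standing in for the content behind egnyte-search.
ITEMS = [
    {"path": "/Shared/Marketing/Q3 Campaigns", "type": "folder"},
    {"path": "/Shared/Marketing/Q3 Campaigns/brief.docx", "type": "Document"},
    {"path": "/Shared/Marketing/Q3 Campaigns/deck.pdf", "type": "PDF"},
    {"path": "/Shared/Marketing/Q3 Campaigns/logo.png", "type": "Image"},
    {"path": "/Shared/Sales/forecast.pdf", "type": "PDF"},
]


def egnyte_search(query="", *, item_type="file", file_type=None, folder_path=None):
    """Stand-in search that accepts only one file type per call."""
    hits = []
    for item in ITEMS:
        is_folder = item["type"] == "folder"
        if is_folder != (item_type == "folder"):
            continue
        if file_type is not None and item["type"] != file_type:
            continue
        if folder_path is not None and not item["path"].startswith(folder_path + "/"):
            continue
        if query.lower() not in item["path"].lower():
            continue
        hits.append(item["path"])
    return hits


def find_in_folder(folder_name, file_types):
    """Step 1: resolve the folder path. Step 2: one search per file type."""
    folders = egnyte_search(folder_name, item_type="folder")
    if not folders:
        return []
    found = []
    for file_type in file_types:
        found.extend(egnyte_search(folder_path=folders[0], file_type=file_type))
    return found


results = find_in_folder("Q3 Campaigns", ["Document", "PDF"])
print(results)
```

The orchestration lives entirely in `find_in_folder`; the underlying search stays simple, which mirrors how the agent extends an API without changing it.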

Signal Through the Noise: Escaping the Keyword Haystack

Traditional keyword searches often end up returning multiple documents that contain a search term but lack the right context. Users are then forced to manually sift through low-relevance results to find the relevant document.

A Metadata-First Strategy

The agent is explicitly designed to avoid this problem by following a priority ladder in its reasoning.

- Prioritize structure over keywords: The agent’s first mandate is never to perform a broad keyword search if it detects any hint of structure in the query. It will always try to use a precise metadata filter first. This single design choice dramatically reduces noise from the very beginning.

- Iterative narrowing: If it must start with a broader search, its goal is to find a single result with relevant metadata. Once found, it immediately pivots, using that newfound metadata to launch a new, much narrower search. It actively seeks to escape the noise of keyword search in favor of the precision of structured filters.

This precision-first approach means the agent delivers a smaller, but vastly more relevant, set of initial results, saving the user from information overload.
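A simplified version of this priority ladder is sketched below. The property catalog, the word-level matching in `plan_search`, and the `pivot_from_results` helper are assumptions chosen to keep the example short; the agent's real decisions are made by an LLM.

```python
# Hypothetical catalog of known metadata properties and their values.
PROPERTIES = {
    "status": ["approved", "draft"],
    "jurisdiction": ["california"],
}


def plan_search(query, properties):
    """Prefer metadata filters; fall back to keywords only if none match."""
    filters, remaining = {}, []
    for word in query.lower().split():
        prop = next((p for p, values in properties.items() if word in values), None)
        if prop is None:
            remaining.append(word)
        else:
            filters[prop] = word
    mode = "metadata" if filters else "keyword"
    return {"mode": mode, "filters": filters, "query": " ".join(remaining)}


def pivot_from_results(results):
    """Iterative narrowing: reuse metadata from the first tagged result."""
    for result in results:
        if result.get("metadata"):
            return {"mode": "metadata", "filters": dict(result["metadata"]), "query": ""}
    return None


print(plan_search("approved contracts", PROPERTIES))
print(plan_search("falcon kickoff notes", PROPERTIES))

# Made-up results of the broad keyword search for the second query.
broad_results = [
    {"name": "notes.txt", "metadata": {}},
    {"name": "falcon_plan.pdf", "metadata": {"project": "Falcon"}},
]
print(pivot_from_results(broad_results))
```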

Transparent Reasoning: The Quest for Explainable Search

The agent shows its reasoning at every step of the process.

- Thoughts: For every action it takes, from discovering metadata to shortlisting a result, the agent generates a human-readable thought. Users can follow its logical progression: "Okay, I'm looking for contracts. I'll start by checking the 'Egnyte Contract Properties' metadata..."

- Curating results: When using the results-curation tool, its thought explains why those specific documents were chosen and how they satisfy the user's criteria. If a search fails, it explains the failure and outlines its plan for the next step.

This transparency demystifies the search process, building user trust and empowering them to understand how the agent arrived at its conclusions.
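A reasoning trace of this kind could be represented as a list of steps that is rendered for the user. The `Step` fields and the output format below are assumptions for illustration, as is the `contract_status` property name.

```python
from dataclasses import dataclass


@dataclass
class Step:
    """One reason/act turn: the thought, the tool used, and the hit count."""
    thought: str
    tool: str
    hits: int


def render_trace(steps):
    """Format each step as a numbered, human-readable line."""
    lines = []
    for number, step in enumerate(steps, start=1):
        if step.hits == 0:
            outcome = "no results, trying another approach"
        else:
            outcome = f"{step.hits} result(s)"
        lines.append(f"{number}. [{step.tool}] {step.thought} -> {outcome}")
    return "\n".join(lines)


trace = [
    Step("Filtering contracts on status=approved.", "egnyte-search", 0),
    Step("Retrying with contract_status=approved.", "egnyte-search", 3),
]
print(render_trace(trace))
```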

The Specialist in the Room: Why Great Agents Need Great Tools

As we build more complex AI assistants, they need reliable tools to delegate search tasks. A Contract Analyst Agent, for example, shouldn't have to master the intricacies of file search—it just needs a definitive list of contracts to analyze.

Search Agent as a Tool

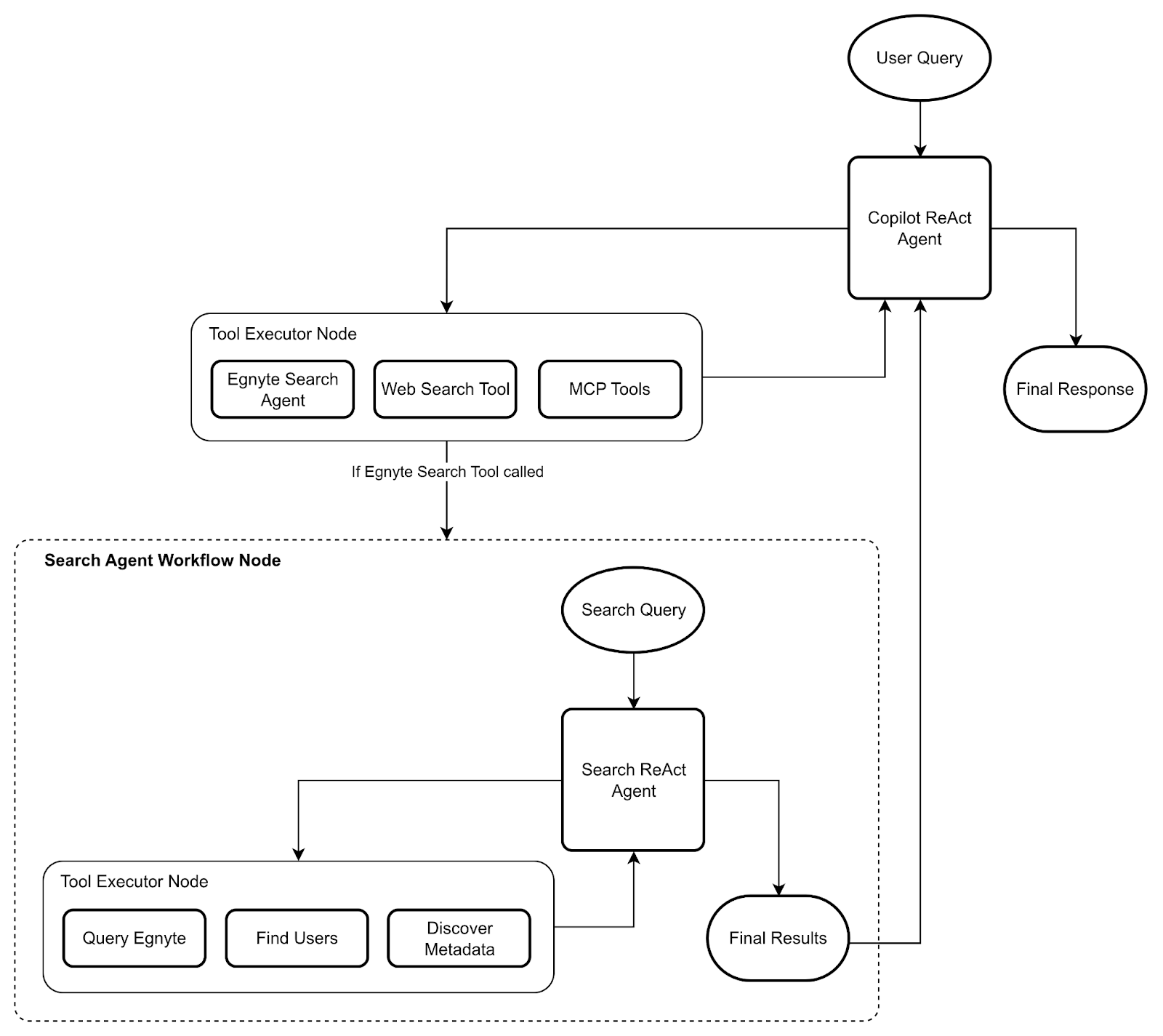

The Search Agent workflow is encapsulated as a single tool. This tool can be provided to the Egnyte Copilot orchestrator, which is built as a general-purpose ReAct workflow.

When Copilot decides to use the Search Agent tool, it branches to a node that contains the Search Agent workflow. The Search Agent workflow runs as a subgraph of the Copilot workflow, so both share the same state. Here’s the integration flow:

- User request: A user asks Copilot, "Find all Master Service Agreements with Acme Corp executed in the last year and summarize their renewal dates."

- Copilot: Copilot sees the first part of the request is a file search. It calls its search-agent tool with the query.

- Delegation to Search Agent: When Copilot calls the search-agent tool, it branches to the step that runs the Search Agent workflow.

- Search Agent: Our Search Agent kicks into its full, self-contained, and independent ReAct loop. It discovers metadata, searches Egnyte, and stages the results.

- Curated results: The Search Agent completes its exhaustive search and returns clean, shortlisted results back to Copilot.

- Progress updates: Copilot returns the progress and reasoning with every step of its workflow, including the Search Agent workflow, since they both share their state. This makes the search process transparent to the user.

- Completing the broader task: Copilot now takes that list of files and proceeds with the second part of the user's request, such as calling a content-extraction tool to read the files and synthesize a summary.
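The flow above can be approximated with a plain-Python sketch in which the orchestrator and the Search Agent mutate one shared state dictionary. This does not use LangGraph's subgraph API; the tool registry, the state keys, the fixed tool plan (standing in for Copilot's LLM reasoning), and the file path are all hypothetical.

```python
def search_agent(state):
    """Stand-in for the Search Agent sub-workflow; writes to the shared state."""
    state["progress"].append("search-agent: discovering metadata")
    state["progress"].append("search-agent: searching Egnyte")
    state["files"] = ["/Contracts/Acme/msa_example.pdf"]  # canned result
    state["progress"].append(f"search-agent: shortlisted {len(state['files'])} file(s)")


def content_extraction(state):
    """Stand-in for a content-extraction tool that reads the shortlisted files."""
    state["progress"].append("copilot: extracting renewal dates")
    state["summary"] = {path: "<renewal date placeholder>" for path in state["files"]}


TOOLS = {"search-agent": search_agent, "content-extraction": content_extraction}


def copilot(request):
    """Orchestrator: a fixed two-tool plan replaces the LLM's decisions here."""
    state = {"request": request, "progress": [], "files": [], "summary": {}}
    for tool_name in ("search-agent", "content-extraction"):
        state["progress"].append(f"copilot: calling {tool_name}")
        TOOLS[tool_name](state)  # the tool updates the same state object
    return state


state = copilot("Find all MSAs with Acme Corp and summarize their renewal dates.")
for line in state["progress"]:
    print(line)
```

Because both workflows append to the same `progress` list, the orchestrator can surface the Search Agent's intermediate steps without any extra plumbing, which is the benefit of shared state described above.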

Conclusion and the Road Ahead

This specialist Search Agent becomes a foundational capability for a higher-level orchestrator, like Egnyte Copilot. Copilot can now seamlessly delegate complex retrieval tasks, allowing it to focus on orchestrating multi-step workflows across different domains—from analyzing a contract found in Egnyte to finding information from Procore.

While prompt engineering and context management present significant challenges, the system is designed to be self-improving and transparent to users. Here’s where we’re taking it next:

- Proactive guidance: We’re evolving the agent to move from reactive answering to proactive guidance. Soon, it will be able to ask clarifying questions when faced with ambiguity ("I found two 'Project Falcon' folders, which one did you mean?") and suggest relevant next steps based on the files it finds.

- Multimodal understanding: We’re working to equip the agent with multimodal capabilities, allowing it to understand queries about the content of images, audio, and video files, not just their metadata.

- Copilot integration: The Search Agent will become an integrated tool for the Egnyte Copilot, enabling it to perform content-aware actions and orchestrate complex business processes across the entire Egnyte platform and beyond.

This is a definitive step toward a future where agents become trusted, proactive partners in understanding and acting on the knowledge within a company's most valuable data.